commit

8d80c6b1e1

17 changed files with 2044 additions and 1542 deletions

|

|

@ -19,7 +19,7 @@ WORKDIR /app

|

||||||

|

|

||||||

# run crond as main process of container

|

# run crond as main process of container

|

||||||

ENTRYPOINT ["/bin/sh", "/app/entrypoint.sh"]

|

ENTRYPOINT ["/bin/sh", "/app/entrypoint.sh"]

|

||||||

CMD ["dishStatusInflux.py"]

|

CMD ["dish_grpc_influx.py status alert_detail"]

|

||||||

|

|

||||||

# docker run -d --name='starlink-grpc-tools' -e INFLUXDB_HOST=192.168.1.34 -e INFLUXDB_PORT=8086 -e INFLUXDB_DB=starlink

|

# docker run -d --name='starlink-grpc-tools' -e INFLUXDB_HOST=192.168.1.34 -e INFLUXDB_PORT=8086 -e INFLUXDB_DB=starlink

|

||||||

# --net='br0' --ip='192.168.1.39' neurocis/starlink-grpc-tools dishStatusInflux.py

|

# --net='br0' --ip='192.168.1.39' neurocis/starlink-grpc-tools dish_grpc_influx.py status alert_detail

|

||||||

|

|

|

||||||

46

README.md

46

README.md

|

|

@ -38,7 +38,7 @@ python parseJsonHistory.py -h

|

||||||

|

|

||||||

When used as-is, `parseJsonHistory.py` will summarize packet loss information from the data the dish records. There's other bits of data in there, though, so that script (or more likely the parsing logic it uses, which now resides in `starlink_json.py`) could be used as a starting point or example of how to iterate through it. Most of the data displayed in the Statistics page of the Starlink app appears to come from this same `get_history` gRPC response. See the file `get_history_notes.txt` for some ramblings on how to interpret it.

|

When used as-is, `parseJsonHistory.py` will summarize packet loss information from the data the dish records. There's other bits of data in there, though, so that script (or more likely the parsing logic it uses, which now resides in `starlink_json.py`) could be used as a starting point or example of how to iterate through it. Most of the data displayed in the Statistics page of the Starlink app appears to come from this same `get_history` gRPC response. See the file `get_history_notes.txt` for some ramblings on how to interpret it.

|

||||||

|

|

||||||

The one bit of functionality this script has over the grpc scripts is that it supports capturing the grpcurl output to a file and reading from that, which may be useful if you're collecting data in one place but analyzing it in another. Otherwise, it's probably better to use `dishHistoryStats.py`, described below.

|

The one bit of functionality this script has over the grpc scripts is that it supports capturing the grpcurl output to a file and reading from that, which may be useful if you're collecting data in one place but analyzing it in another. Otherwise, it's probably better to use `dish_grpc_text.py`, described below.

|

||||||

|

|

||||||

### The grpc scripts

|

### The grpc scripts

|

||||||

|

|

||||||

|

|

@ -57,52 +57,60 @@ python3 -m grpc_tools.protoc --descriptor_set_in=../dish.protoset --python_out=.

|

||||||

```

|

```

|

||||||

Then move the resulting files to where the Python scripts can find them in the import path, such as in the same directory as the scripts themselves.

|

Then move the resulting files to where the Python scripts can find them in the import path, such as in the same directory as the scripts themselves.

|

||||||

|

|

||||||

Once those are available, the `dishHistoryStats.py` script can be used in place of the `grpcurl | parseJsonHistory.py` pipeline, with most of the same command line options. For example:

|

Once those are available, the `dish_grpc_text.py` script can be used in place of the `grpcurl | parseJsonHistory.py` pipeline; however, the command line interface is slightly different because `dish_grpc_text.py` supports additional functionality. The equivalent command to `grpcurl | parseJsonHistory.py` would be:

|

||||||

```

|

```

|

||||||

python3 parseHistoryStats.py

|

python3 dish_grpc_text.py ping_drop

|

||||||

```

|

```

|

||||||

|

|

||||||

By default, `parseHistoryStats.py` (and `parseJsonHistory.py`) will output the stats in CSV format. You can use the `-v` option to instead output in a (slightly) more human-readable format.

|

By default, `dish_grpc_text.py` (and `parseJsonHistory.py`) will output in CSV format. You can use the `-v` option to instead output in a (slightly) more human-readable format.

|

||||||

|

|

||||||

To collect and record summary stats at the top of every hour, you could put something like the following in your user crontab (assuming you have moved the scripts to ~/bin and made them executable):

|

To collect and record packet loss summary stats at the top of every hour, you could put something like the following in your user crontab (assuming you have moved the scripts to ~/bin and made them executable):

|

||||||

```

|

```

|

||||||

00 * * * * [ -e ~/dishStats.csv ] || ~/bin/dishHistoryStats.py -H >~/dishStats.csv; ~/bin/dishHistoryStats.py >>~/dishStats.csv

|

00 * * * * [ -e ~/dishStats.csv ] || ~/bin/dish_grpc_text.py -H >~/dishStats.csv; ~/bin/dish_grpc_text.py ping_drop >>~/dishStats.csv

|

||||||

```

|

```

|

||||||

|

|

||||||

`dishHistoryInflux.py` and `dishHistoryMqtt.py` are similar, but they send their output to an InfluxDB server and a MQTT broker, respectively. Run them with `-h` command line option for details on how to specify server and/or database options.

|

`dish_grpc_influx.py`, `dish_grpc_sqlite.py`, and `dish_grpc_mqtt.py` are similar, but they send their output to an InfluxDB server, a sqlite database, and a MQTT broker, respectively. Run them with `-h` command line option for details on how to specify server and/or database options.

|

||||||

|

|

||||||

`dishStatusCsv.py`, `dishStatusInflux.py`, and `dishStatusMqtt.py` output the status data instead of history data, to various data backends. The information they pull is mostly what appears related to the dish in the Debug Data section of the Starlink app. As with the corresponding history scripts, run them with `-h` command line option for usage details.

|

All 4 scripts support processing status data in addition to the history data. The status data is mostly what appears related to the dish in the Debug Data section of the Starlink app. Specific status or history data groups can be selected by including their mode names on the command line. Run the scripts with `-h` command line option to get a list of available modes. See the documentation at the top of `starlink_grpc.py` for detail on what each of the fields means within each mode group.

|

||||||

|

|

||||||

By default, all of these scripts will pull data once, send it off to the specified data backend, and then exit. They can instead be made to run in a periodic loop by passing a `-t` option to specify loop interval, in seconds. For example, to capture status information to a InfluxDB server every 30 seconds, you could do something like this:

|

By default, all of these scripts will pull data once, send it off to the specified data backend, and then exit. They can instead be made to run in a periodic loop by passing a `-t` option to specify loop interval, in seconds. For example, to capture status information to a InfluxDB server every 30 seconds, you could do something like this:

|

||||||

```

|

```

|

||||||

python3 dishStatusInflux.py -t 30 [... probably other args to specify server options ...]

|

python3 dish_grpc_influx.py -t 30 [... probably other args to specify server options ...] status

|

||||||

```

|

```

|

||||||

|

|

||||||

Some of the scripts (currently only the InfluxDB ones) also support specifying options through environment variables. See details in the scripts for the environment variables that map to options.

|

Some of the scripts (currently only the InfluxDB one) also support specifying options through environment variables. See details in the scripts for the environment variables that map to options.

|

||||||

|

|

||||||

#### Bulk history data collection

|

#### Bulk history data collection

|

||||||

|

|

||||||

`dishStatusInflux.py` also supports a bulk mode that collects and writes the full second-by-second data to the server instead of summary stats. To select bulk mode, use the `-b` option. You'll probably also want to use the `-t` option to have it run in a loop.

|

`dish_grpc_influx.py`, `dish_grpc_sqlite.py`, and `dish_grpc_text.py` also support a bulk history mode that collects and writes the full second-by-second data instead of summary stats. To select bulk mode, use `bulk_history` for the mode argument. You'll probably also want to use the `-t` option to have it run in a loop.

|

||||||

|

|

||||||

### Other scripts

|

### Other scripts

|

||||||

|

|

||||||

`dishDumpStatus.py` is a simple example of how to use the grpc modules (the ones generated by protoc, not `starlink_grpc.py`) directly. Just run it as:

|

`dump_dish_status.py` is a simple example of how to use the grpc modules (the ones generated by protoc, not `starlink_grpc`) directly. Just run it as:

|

||||||

```

|

```

|

||||||

python3 dishDumpStatus.py

|

python3 dump_dish_status.py

|

||||||

```

|

```

|

||||||

and revel in copious amounts of dish status information. OK, maybe it's not as impressive as all that. This one is really just meant to be a starting point for real functionality to be added to it.

|

and revel in copious amounts of dish status information. OK, maybe it's not as impressive as all that. This one is really just meant to be a starting point for real functionality to be added to it.

|

||||||

|

|

||||||

|

`poll_history.py` is another silly example, but this one illustrates how to periodically poll the status and/or bulk history data using the `starlink_grpc` module's API. It's not really useful by itself, but if you really want to, you can run it as:

|

||||||

|

```

|

||||||

|

python3 poll_history.py

|

||||||

|

```

|

||||||

Possibly more simple examples to come, as the other scripts have started getting a bit complicated.

|

Possibly more simple examples to come, as the other scripts have started getting a bit complicated.

|

||||||

|

|

||||||

## To Be Done (Maybe)

|

## To Be Done (Maybe)

|

||||||

|

|

||||||

|

Maybe more data backend options. If there's one you'd like to see supported, please open a feature request issue.

|

||||||

|

|

||||||

There are `reboot` and `dish_stow` requests in the Device protocol, too, so it should be trivial to write a command that initiates dish reboot and stow operations. These are easy enough to do with `grpcurl`, though, as there is no need to parse through the response data. For that matter, they're easy enough to do with the Starlink app.

|

There are `reboot` and `dish_stow` requests in the Device protocol, too, so it should be trivial to write a command that initiates dish reboot and stow operations. These are easy enough to do with `grpcurl`, though, as there is no need to parse through the response data. For that matter, they're easy enough to do with the Starlink app.

|

||||||

|

|

||||||

Proper Python packaging, since some of the scripts are no longer self-contained.

|

Proper Python packaging, since some of the scripts are no longer self-contained.

|

||||||

|

|

||||||

|

The requirement to run `grpcurl` and `protoc` could be eliminated by adding support for use of gRPC server reflection directly in the grpc scripts. This would sidestep any packaging questions about whether or not the protoc-generated files could be redistributed.

|

||||||

|

|

||||||

## Other Tidbits

|

## Other Tidbits

|

||||||

|

|

||||||

The Starlink Android app actually uses port 9201 instead of 9200. Both appear to expose the same gRPC service, but the one on port 9201 uses an HTTP/1.1 wrapper, whereas the one on port 9200 uses HTTP/2.0, which is what most gRPC tools expect.

|

The Starlink Android app actually uses port 9201 instead of 9200. Both appear to expose the same gRPC service, but the one on port 9201 uses [gRPC-Web](https://github.com/grpc/grpc/blob/master/doc/PROTOCOL-WEB.md), which can use HTTP/1.1, whereas the one on port 9200 uses HTTP/2, which is what most gRPC tools expect.

|

||||||

|

|

||||||

The Starlink router also exposes a gRPC service, on ports 9000 (HTTP/2.0) and 9001 (HTTP/1.1).

|

The Starlink router also exposes a gRPC service, on ports 9000 (HTTP/2.0) and 9001 (HTTP/1.1).

|

||||||

|

|

||||||

|

|

@ -116,13 +124,17 @@ docker run -d -t --name='starlink-grpc-tools' -e INFLUXDB_HOST={InfluxDB Hostnam

|

||||||

-e INFLUXDB_USER={Optional, InfluxDB Username} \

|

-e INFLUXDB_USER={Optional, InfluxDB Username} \

|

||||||

-e INFLUXDB_PWD={Optional, InfluxDB Password} \

|

-e INFLUXDB_PWD={Optional, InfluxDB Password} \

|

||||||

-e INFLUXDB_DB={Pre-created DB name, starlinkstats works well} \

|

-e INFLUXDB_DB={Pre-created DB name, starlinkstats works well} \

|

||||||

neurocis/starlink-grpc-tools dishStatusInflux.py -v

|

neurocis/starlink-grpc-tools dish_grpc_influx.py -v status alert_detail

|

||||||

```

|

```

|

||||||

|

|

||||||

The `-t` option to `docker run` will prevent Python from buffering the script's standard output and can be omitted if you don't care about seeing the verbose output in the container logs as soon as it is printed.

|

The `-t` option to `docker run` will prevent Python from buffering the script's standard output and can be omitted if you don't care about seeing the verbose output in the container logs as soon as it is printed.

|

||||||

|

|

||||||

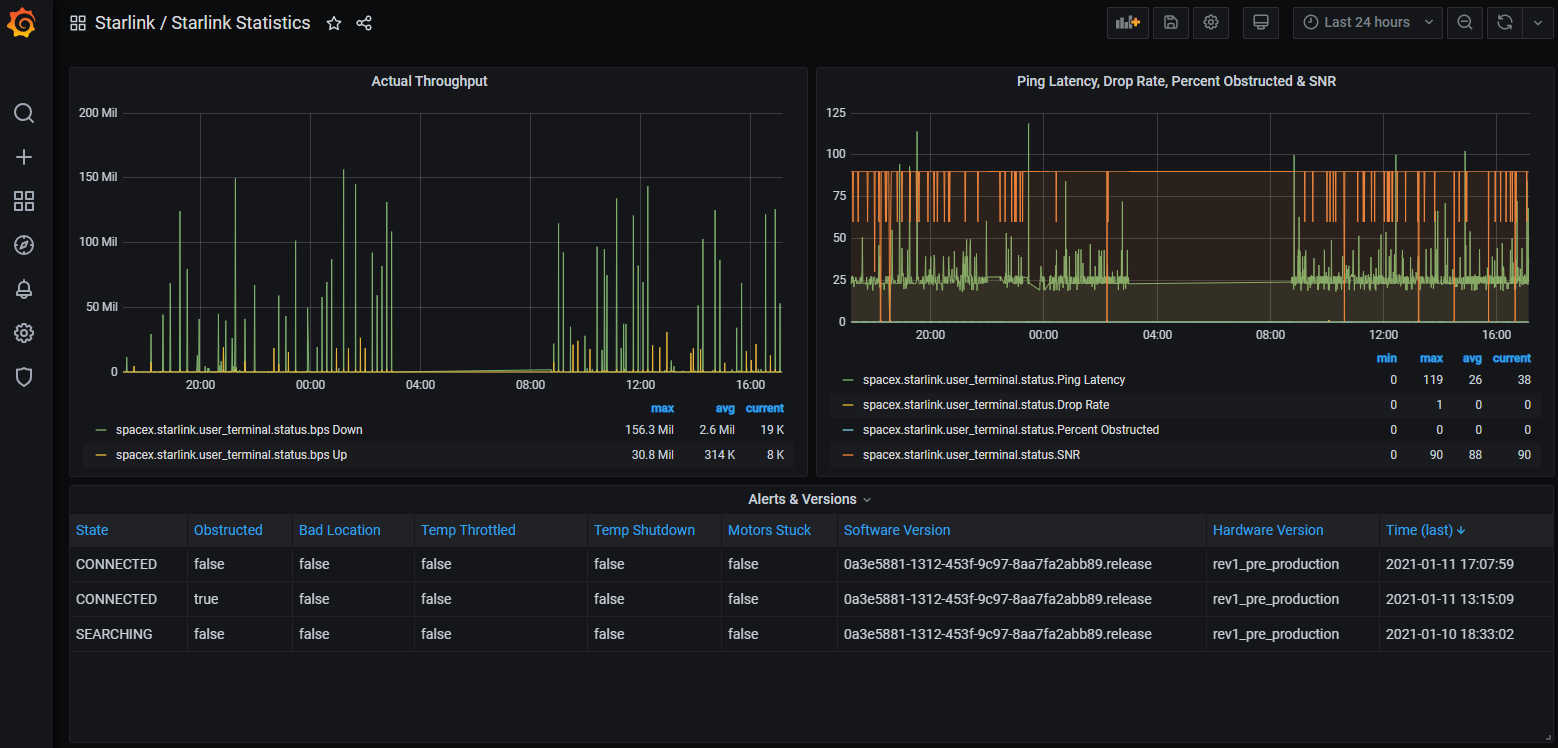

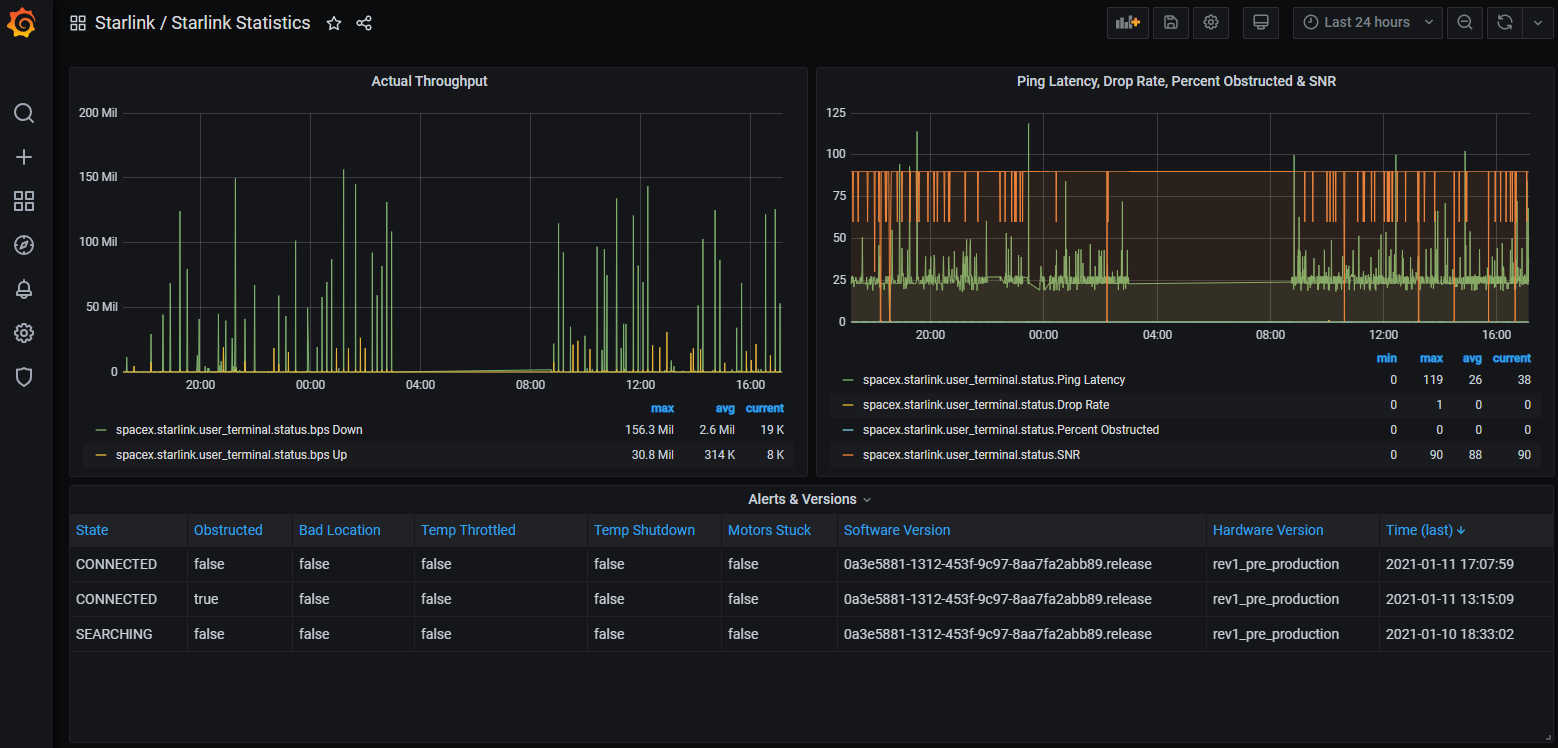

The `dishStatusInflux.py -v` is optional and omitting it will run same but not verbose, or you can replace it with one of the other scripts if you wish to run that instead, or use other command line options. There is also a `GrafanaDashboard - Starlink Statistics.json` which can be imported to get some charts like:

|

The `dish_grpc_influx.py -v status alert_detail` is optional and omitting it will run same but not verbose, or you can replace it with one of the other scripts if you wish to run that instead, or use other command line options. There is also a `GrafanaDashboard - Starlink Statistics.json` which can be imported to get some charts like:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

You'll probably want to run with the `-t` option to `dishStatusInflux.py` to collect status information periodically for this to be meaningful.

|

You'll probably want to run with the `-t` option to `dish_grpc_influx.py` to collect status information periodically for this to be meaningful.

|

||||||

|

|

||||||

|

## Related Projects

|

||||||

|

|

||||||

|

[ChuckTSI's Better Than Nothing Web Interface](https://github.com/ChuckTSI/BetterThanNothingWebInterface) uses grpcurl and PHP to provide a spiffy web UI for some of the same data this project works on.

|

||||||

|

|

|

||||||

|

|

@ -1,26 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Simple example of how to poll the get_status request directly using

|

|

||||||

# grpc calls.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

import grpc

|

|

||||||

|

|

||||||

import spacex.api.device.device_pb2

|

|

||||||

import spacex.api.device.device_pb2_grpc

|

|

||||||

|

|

||||||

# Note that if you remove the 'with' clause here, you need to separately

|

|

||||||

# call channel.close() when you're done with the gRPC connection.

|

|

||||||

with grpc.insecure_channel("192.168.100.1:9200") as channel:

|

|

||||||

stub = spacex.api.device.device_pb2_grpc.DeviceStub(channel)

|

|

||||||

response = stub.Handle(spacex.api.device.device_pb2.Request(get_status={}))

|

|

||||||

|

|

||||||

# Dump everything

|

|

||||||

print(response)

|

|

||||||

|

|

||||||

## Just the software version

|

|

||||||

#print(response.dish_get_status.device_info.software_version)

|

|

||||||

|

|

||||||

## Check if connected

|

|

||||||

#print("Connected" if response.dish_get_status.state == spacex.api.device.dish_pb2.DishState.CONNECTED else "Not connected")

|

|

||||||

|

|

@ -1,414 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Write Starlink user terminal packet loss, latency, and usage data

|

|

||||||

# to an InfluxDB database.

|

|

||||||

#

|

|

||||||

# This script examines the most recent samples from the history data,

|

|

||||||

# and either writes them in whole, or computes several different

|

|

||||||

# metrics related to packet loss and writes those, to the specified

|

|

||||||

# InfluxDB database.

|

|

||||||

#

|

|

||||||

# NOTE: The Starlink user terminal does not include time values with

|

|

||||||

# its history or status data, so this script uses current system time

|

|

||||||

# to compute the timestamps it sends to InfluxDB. It is recommended

|

|

||||||

# to run this script on a host that has its system clock synced via

|

|

||||||

# NTP. Otherwise, the timestamps may get out of sync with real time.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

|

|

||||||

import getopt

|

|

||||||

from datetime import datetime

|

|

||||||

from datetime import timezone

|

|

||||||

import logging

|

|

||||||

import os

|

|

||||||

import signal

|

|

||||||

import sys

|

|

||||||

import time

|

|

||||||

import warnings

|

|

||||||

|

|

||||||

from influxdb import InfluxDBClient

|

|

||||||

|

|

||||||

import starlink_grpc

|

|

||||||

|

|

||||||

BULK_MEASUREMENT = "spacex.starlink.user_terminal.history"

|

|

||||||

PING_MEASUREMENT = "spacex.starlink.user_terminal.ping_stats"

|

|

||||||

MAX_QUEUE_LENGTH = 864000

|

|

||||||

|

|

||||||

|

|

||||||

class Terminated(Exception):

|

|

||||||

pass

|

|

||||||

|

|

||||||

|

|

||||||

def handle_sigterm(signum, frame):

|

|

||||||

# Turn SIGTERM into an exception so main loop can clean up

|

|

||||||

raise Terminated()

|

|

||||||

|

|

||||||

|

|

||||||

def main():

|

|

||||||

arg_error = False

|

|

||||||

|

|

||||||

try:

|

|

||||||

opts, args = getopt.getopt(sys.argv[1:], "abhkn:p:rs:t:vC:D:IP:R:SU:")

|

|

||||||

except getopt.GetoptError as err:

|

|

||||||

print(str(err))

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

# Default to 1 hour worth of data samples.

|

|

||||||

samples_default = 3600

|

|

||||||

samples = None

|

|

||||||

print_usage = False

|

|

||||||

verbose = False

|

|

||||||

default_loop_time = 0

|

|

||||||

loop_time = default_loop_time

|

|

||||||

bulk_mode = False

|

|

||||||

bulk_skip_query = False

|

|

||||||

run_lengths = False

|

|

||||||

host_default = "localhost"

|

|

||||||

database_default = "starlinkstats"

|

|

||||||

icargs = {"host": host_default, "timeout": 5, "database": database_default}

|

|

||||||

rp = None

|

|

||||||

flush_limit = 6

|

|

||||||

max_batch = 5000

|

|

||||||

|

|

||||||

# For each of these check they are both set and not empty string

|

|

||||||

influxdb_host = os.environ.get("INFLUXDB_HOST")

|

|

||||||

if influxdb_host:

|

|

||||||

icargs["host"] = influxdb_host

|

|

||||||

influxdb_port = os.environ.get("INFLUXDB_PORT")

|

|

||||||

if influxdb_port:

|

|

||||||

icargs["port"] = int(influxdb_port)

|

|

||||||

influxdb_user = os.environ.get("INFLUXDB_USER")

|

|

||||||

if influxdb_user:

|

|

||||||

icargs["username"] = influxdb_user

|

|

||||||

influxdb_pwd = os.environ.get("INFLUXDB_PWD")

|

|

||||||

if influxdb_pwd:

|

|

||||||

icargs["password"] = influxdb_pwd

|

|

||||||

influxdb_db = os.environ.get("INFLUXDB_DB")

|

|

||||||

if influxdb_db:

|

|

||||||

icargs["database"] = influxdb_db

|

|

||||||

influxdb_rp = os.environ.get("INFLUXDB_RP")

|

|

||||||

if influxdb_rp:

|

|

||||||

rp = influxdb_rp

|

|

||||||

influxdb_ssl = os.environ.get("INFLUXDB_SSL")

|

|

||||||

if influxdb_ssl:

|

|

||||||

icargs["ssl"] = True

|

|

||||||

if influxdb_ssl.lower() == "secure":

|

|

||||||

icargs["verify_ssl"] = True

|

|

||||||

elif influxdb_ssl.lower() == "insecure":

|

|

||||||

icargs["verify_ssl"] = False

|

|

||||||

else:

|

|

||||||

icargs["verify_ssl"] = influxdb_ssl

|

|

||||||

|

|

||||||

if not arg_error:

|

|

||||||

if len(args) > 0:

|

|

||||||

arg_error = True

|

|

||||||

else:

|

|

||||||

for opt, arg in opts:

|

|

||||||

if opt == "-a":

|

|

||||||

samples = -1

|

|

||||||

elif opt == "-b":

|

|

||||||

bulk_mode = True

|

|

||||||

elif opt == "-h":

|

|

||||||

print_usage = True

|

|

||||||

elif opt == "-k":

|

|

||||||

bulk_skip_query = True

|

|

||||||

elif opt == "-n":

|

|

||||||

icargs["host"] = arg

|

|

||||||

elif opt == "-p":

|

|

||||||

icargs["port"] = int(arg)

|

|

||||||

elif opt == "-r":

|

|

||||||

run_lengths = True

|

|

||||||

elif opt == "-s":

|

|

||||||

samples = int(arg)

|

|

||||||

elif opt == "-t":

|

|

||||||

loop_time = float(arg)

|

|

||||||

elif opt == "-v":

|

|

||||||

verbose = True

|

|

||||||

elif opt == "-C":

|

|

||||||

icargs["ssl"] = True

|

|

||||||

icargs["verify_ssl"] = arg

|

|

||||||

elif opt == "-D":

|

|

||||||

icargs["database"] = arg

|

|

||||||

elif opt == "-I":

|

|

||||||

icargs["ssl"] = True

|

|

||||||

icargs["verify_ssl"] = False

|

|

||||||

elif opt == "-P":

|

|

||||||

icargs["password"] = arg

|

|

||||||

elif opt == "-R":

|

|

||||||

rp = arg

|

|

||||||

elif opt == "-S":

|

|

||||||

icargs["ssl"] = True

|

|

||||||

icargs["verify_ssl"] = True

|

|

||||||

elif opt == "-U":

|

|

||||||

icargs["username"] = arg

|

|

||||||

|

|

||||||

if "password" in icargs and "username" not in icargs:

|

|

||||||

print("Password authentication requires username to be set")

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

if print_usage or arg_error:

|

|

||||||

print("Usage: " + sys.argv[0] + " [options...]")

|

|

||||||

print("Options:")

|

|

||||||

print(" -a: Parse all valid samples")

|

|

||||||

print(" -b: Bulk mode: write individual sample data instead of summary stats")

|

|

||||||

print(" -h: Be helpful")

|

|

||||||

print(" -k: Skip querying for prior sample write point in bulk mode")

|

|

||||||

print(" -n <name>: Hostname of InfluxDB server, default: " + host_default)

|

|

||||||

print(" -p <num>: Port number to use on InfluxDB server")

|

|

||||||

print(" -r: Include ping drop run length stats")

|

|

||||||

print(" -s <num>: Number of data samples to parse; in bulk mode, applies to first")

|

|

||||||

print(" loop iteration only, default: -1 in bulk mode, loop interval if")

|

|

||||||

print(" loop interval set, else " + str(samples_default))

|

|

||||||

print(" -t <num>: Loop interval in seconds or 0 for no loop, default: " +

|

|

||||||

str(default_loop_time))

|

|

||||||

print(" -v: Be verbose")

|

|

||||||

print(" -C <filename>: Enable SSL/TLS using specified CA cert to verify server")

|

|

||||||

print(" -D <name>: Database name to use, default: " + database_default)

|

|

||||||

print(" -I: Enable SSL/TLS but disable certificate verification (INSECURE!)")

|

|

||||||

print(" -P <word>: Set password for authentication")

|

|

||||||

print(" -R <name>: Retention policy name to use")

|

|

||||||

print(" -S: Enable SSL/TLS using default CA cert")

|

|

||||||

print(" -U <name>: Set username for authentication")

|

|

||||||

sys.exit(1 if arg_error else 0)

|

|

||||||

|

|

||||||

if samples is None:

|

|

||||||

samples = -1 if bulk_mode else int(loop_time) if loop_time > 0 else samples_default

|

|

||||||

|

|

||||||

logging.basicConfig(format="%(levelname)s: %(message)s")

|

|

||||||

|

|

||||||

class GlobalState:

|

|

||||||

pass

|

|

||||||

|

|

||||||

gstate = GlobalState()

|

|

||||||

gstate.dish_id = None

|

|

||||||

gstate.points = []

|

|

||||||

gstate.counter = None

|

|

||||||

gstate.timestamp = None

|

|

||||||

gstate.query_done = bulk_skip_query

|

|

||||||

|

|

||||||

def conn_error(msg, *args):

|

|

||||||

# Connection errors that happen in an interval loop are not critical

|

|

||||||

# failures, but are interesting enough to print in non-verbose mode.

|

|

||||||

if loop_time > 0:

|

|

||||||

print(msg % args)

|

|

||||||

else:

|

|

||||||

logging.error(msg, *args)

|

|

||||||

|

|

||||||

def flush_points(client):

|

|

||||||

# Don't flush points to server if the counter query failed, since some

|

|

||||||

# may be discarded later. Write would probably fail, too, anyway.

|

|

||||||

if bulk_mode and not gstate.query_done:

|

|

||||||

return 1

|

|

||||||

|

|

||||||

try:

|

|

||||||

while len(gstate.points) > max_batch:

|

|

||||||

client.write_points(gstate.points[:max_batch],

|

|

||||||

time_precision="s",

|

|

||||||

retention_policy=rp)

|

|

||||||

if verbose:

|

|

||||||

print("Data points written: " + str(max_batch))

|

|

||||||

del gstate.points[:max_batch]

|

|

||||||

if gstate.points:

|

|

||||||

client.write_points(gstate.points, time_precision="s", retention_policy=rp)

|

|

||||||

if verbose:

|

|

||||||

print("Data points written: " + str(len(gstate.points)))

|

|

||||||

gstate.points.clear()

|

|

||||||

except Exception as e:

|

|

||||||

conn_error("Failed writing to InfluxDB database: %s", str(e))

|

|

||||||

# If failures persist, don't just use infinite memory. Max queue

|

|

||||||

# is currently 10 days of bulk data, so something is very wrong

|

|

||||||

# if it's ever exceeded.

|

|

||||||

if len(gstate.points) > MAX_QUEUE_LENGTH:

|

|

||||||

logging.error("Max write queue exceeded, discarding data.")

|

|

||||||

del gstate.points[:-MAX_QUEUE_LENGTH]

|

|

||||||

return 1

|

|

||||||

|

|

||||||

return 0

|

|

||||||

|

|

||||||

def query_counter(client, now, len_points):

|

|

||||||

try:

|

|

||||||

# fetch the latest point where counter field was recorded

|

|

||||||

result = client.query("SELECT counter FROM \"{0}\" "

|

|

||||||

"WHERE time>={1}s AND time<{2}s AND id=$id "

|

|

||||||

"ORDER by time DESC LIMIT 1;".format(

|

|

||||||

BULK_MEASUREMENT, now - len_points, now),

|

|

||||||

bind_params={"id": gstate.dish_id},

|

|

||||||

epoch="s")

|

|

||||||

rpoints = list(result.get_points())

|

|

||||||

if rpoints:

|

|

||||||

counter = rpoints[0].get("counter", None)

|

|

||||||

timestamp = rpoints[0].get("time", 0)

|

|

||||||

if counter and timestamp:

|

|

||||||

return int(counter), int(timestamp)

|

|

||||||

except TypeError as e:

|

|

||||||

# bind_params was added in influxdb-python v5.2.3. That would be

|

|

||||||

# easy enough to work around, but older versions had other problems

|

|

||||||

# with query(), so just skip this functionality.

|

|

||||||

logging.error(

|

|

||||||

"Failed running query, probably due to influxdb-python version too old. "

|

|

||||||

"Skipping resumption from prior counter value. Reported error was: %s", str(e))

|

|

||||||

|

|

||||||

return None, 0

|

|

||||||

|

|

||||||

def process_bulk_data(client):

|

|

||||||

before = time.time()

|

|

||||||

|

|

||||||

start = gstate.counter

|

|

||||||

parse_samples = samples if start is None else -1

|

|

||||||

general, bulk = starlink_grpc.history_bulk_data(parse_samples, start=start, verbose=verbose)

|

|

||||||

|

|

||||||

after = time.time()

|

|

||||||

parsed_samples = general["samples"]

|

|

||||||

new_counter = general["end_counter"]

|

|

||||||

timestamp = gstate.timestamp

|

|

||||||

# check this first, so it doesn't report as lost time sync

|

|

||||||

if gstate.counter is not None and new_counter != gstate.counter + parsed_samples:

|

|

||||||

timestamp = None

|

|

||||||

# Allow up to 2 seconds of time drift before forcibly re-syncing, since

|

|

||||||

# +/- 1 second can happen just due to scheduler timing.

|

|

||||||

if timestamp is not None and not before - 2.0 <= timestamp + parsed_samples <= after + 2.0:

|

|

||||||

if verbose:

|

|

||||||

print("Lost sample time sync at: " +

|

|

||||||

str(datetime.fromtimestamp(timestamp + parsed_samples, tz=timezone.utc)))

|

|

||||||

timestamp = None

|

|

||||||

if timestamp is None:

|

|

||||||

timestamp = int(before)

|

|

||||||

if verbose and gstate.query_done:

|

|

||||||

print("Establishing new time base: {0} -> {1}".format(

|

|

||||||

new_counter, datetime.fromtimestamp(timestamp, tz=timezone.utc)))

|

|

||||||

timestamp -= parsed_samples

|

|

||||||

|

|

||||||

for i in range(parsed_samples):

|

|

||||||

timestamp += 1

|

|

||||||

gstate.points.append({

|

|

||||||

"measurement": BULK_MEASUREMENT,

|

|

||||||

"tags": {

|

|

||||||

"id": gstate.dish_id

|

|

||||||

},

|

|

||||||

"time": timestamp,

|

|

||||||

"fields": {k: v[i] for k, v in bulk.items() if v[i] is not None},

|

|

||||||

})

|

|

||||||

|

|

||||||

# save off counter value for script restart

|

|

||||||

if parsed_samples:

|

|

||||||

gstate.points[-1]["fields"]["counter"] = new_counter

|

|

||||||

|

|

||||||

gstate.counter = new_counter

|

|

||||||

gstate.timestamp = timestamp

|

|

||||||

|

|

||||||

# This is here and not before the points being processed because if the

|

|

||||||

# query previously failed, there will be points that were processed in

|

|

||||||

# a prior loop. This avoids having to handle that as a special case.

|

|

||||||

if not gstate.query_done:

|

|

||||||

try:

|

|

||||||

db_counter, db_timestamp = query_counter(client, timestamp, len(gstate.points))

|

|

||||||

except Exception as e:

|

|

||||||

# could be temporary outage, so try again next time

|

|

||||||

conn_error("Failed querying InfluxDB for prior count: %s", str(e))

|

|

||||||

return

|

|

||||||

gstate.query_done = True

|

|

||||||

start_counter = new_counter - len(gstate.points)

|

|

||||||

if db_counter and start_counter <= db_counter < new_counter:

|

|

||||||

del gstate.points[:db_counter - start_counter]

|

|

||||||

if before - 2.0 <= db_timestamp + len(gstate.points) <= after + 2.0:

|

|

||||||

if verbose:

|

|

||||||

print("Using existing time base: {0} -> {1}".format(

|

|

||||||

db_counter, datetime.fromtimestamp(db_timestamp, tz=timezone.utc)))

|

|

||||||

for point in gstate.points:

|

|

||||||

db_timestamp += 1

|

|

||||||

point["time"] = db_timestamp

|

|

||||||

gstate.timestamp = db_timestamp

|

|

||||||

return

|

|

||||||

if verbose:

|

|

||||||

print("Establishing new time base: {0} -> {1}".format(

|

|

||||||

new_counter, datetime.fromtimestamp(timestamp, tz=timezone.utc)))

|

|

||||||

|

|

||||||

def process_ping_stats():

|

|

||||||

timestamp = time.time()

|

|

||||||

|

|

||||||

general, pd_stats, rl_stats = starlink_grpc.history_ping_stats(samples, verbose)

|

|

||||||

|

|

||||||

all_stats = general.copy()

|

|

||||||

all_stats.update(pd_stats)

|

|

||||||

if run_lengths:

|

|

||||||

for k, v in rl_stats.items():

|

|

||||||

if k.startswith("run_"):

|

|

||||||

for i, subv in enumerate(v, start=1):

|

|

||||||

all_stats[k + "_" + str(i)] = subv

|

|

||||||

else:

|

|

||||||

all_stats[k] = v

|

|

||||||

|

|

||||||

gstate.points.append({

|

|

||||||

"measurement": PING_MEASUREMENT,

|

|

||||||

"tags": {

|

|

||||||

"id": gstate.dish_id

|

|

||||||

},

|

|

||||||

"time": int(timestamp),

|

|

||||||

"fields": all_stats,

|

|

||||||

})

|

|

||||||

|

|

||||||

def loop_body(client):

|

|

||||||

if gstate.dish_id is None:

|

|

||||||

try:

|

|

||||||

gstate.dish_id = starlink_grpc.get_id()

|

|

||||||

if verbose:

|

|

||||||

print("Using dish ID: " + gstate.dish_id)

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

conn_error("Failure getting dish ID: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

if bulk_mode:

|

|

||||||

try:

|

|

||||||

process_bulk_data(client)

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

conn_error("Failure getting history: %s", str(e))

|

|

||||||

return 1

|

|

||||||

else:

|

|

||||||

try:

|

|

||||||

process_ping_stats()

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

conn_error("Failure getting ping stats: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

if verbose:

|

|

||||||

print("Data points queued: " + str(len(gstate.points)))

|

|

||||||

|

|

||||||

if len(gstate.points) >= flush_limit:

|

|

||||||

return flush_points(client)

|

|

||||||

|

|

||||||

return 0

|

|

||||||

|

|

||||||

if "verify_ssl" in icargs and not icargs["verify_ssl"]:

|

|

||||||

# user has explicitly said be insecure, so don't warn about it

|

|

||||||

warnings.filterwarnings("ignore", message="Unverified HTTPS request")

|

|

||||||

|

|

||||||

signal.signal(signal.SIGTERM, handle_sigterm)

|

|

||||||

try:

|

|

||||||

# attempt to hack around breakage between influxdb-python client and 2.0 server:

|

|

||||||

influx_client = InfluxDBClient(**icargs, headers={"Accept": "application/json"})

|

|

||||||

except TypeError:

|

|

||||||

# ...unless influxdb-python package version is too old

|

|

||||||

influx_client = InfluxDBClient(**icargs)

|

|

||||||

try:

|

|

||||||

next_loop = time.monotonic()

|

|

||||||

while True:

|

|

||||||

rc = loop_body(influx_client)

|

|

||||||

if loop_time > 0:

|

|

||||||

now = time.monotonic()

|

|

||||||

next_loop = max(next_loop + loop_time, now)

|

|

||||||

time.sleep(next_loop - now)

|

|

||||||

else:

|

|

||||||

break

|

|

||||||

except Terminated:

|

|

||||||

pass

|

|

||||||

finally:

|

|

||||||

if gstate.points:

|

|

||||||

rc = flush_points(influx_client)

|

|

||||||

influx_client.close()

|

|

||||||

|

|

||||||

sys.exit(rc)

|

|

||||||

|

|

||||||

|

|

||||||

if __name__ == '__main__':

|

|

||||||

main()

|

|

||||||

|

|

@ -1,185 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Publish Starlink user terminal packet loss statistics to a MQTT

|

|

||||||

# broker.

|

|

||||||

#

|

|

||||||

# This script examines the most recent samples from the history data,

|

|

||||||

# computes several different metrics related to packet loss, and

|

|

||||||

# publishes those to the specified MQTT broker.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

|

|

||||||

import getopt

|

|

||||||

import logging

|

|

||||||

import sys

|

|

||||||

import time

|

|

||||||

|

|

||||||

try:

|

|

||||||

import ssl

|

|

||||||

ssl_ok = True

|

|

||||||

except ImportError:

|

|

||||||

ssl_ok = False

|

|

||||||

|

|

||||||

import paho.mqtt.publish

|

|

||||||

|

|

||||||

import starlink_grpc

|

|

||||||

|

|

||||||

|

|

||||||

def main():

|

|

||||||

arg_error = False

|

|

||||||

|

|

||||||

try:

|

|

||||||

opts, args = getopt.getopt(sys.argv[1:], "ahn:p:rs:t:vC:ISP:U:")

|

|

||||||

except getopt.GetoptError as err:

|

|

||||||

print(str(err))

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

# Default to 1 hour worth of data samples.

|

|

||||||

samples_default = 3600

|

|

||||||

samples = None

|

|

||||||

print_usage = False

|

|

||||||

verbose = False

|

|

||||||

default_loop_time = 0

|

|

||||||

loop_time = default_loop_time

|

|

||||||

run_lengths = False

|

|

||||||

host_default = "localhost"

|

|

||||||

mqargs = {"hostname": host_default}

|

|

||||||

username = None

|

|

||||||

password = None

|

|

||||||

|

|

||||||

if not arg_error:

|

|

||||||

if len(args) > 0:

|

|

||||||

arg_error = True

|

|

||||||

else:

|

|

||||||

for opt, arg in opts:

|

|

||||||

if opt == "-a":

|

|

||||||

samples = -1

|

|

||||||

elif opt == "-h":

|

|

||||||

print_usage = True

|

|

||||||

elif opt == "-n":

|

|

||||||

mqargs["hostname"] = arg

|

|

||||||

elif opt == "-p":

|

|

||||||

mqargs["port"] = int(arg)

|

|

||||||

elif opt == "-r":

|

|

||||||

run_lengths = True

|

|

||||||

elif opt == "-s":

|

|

||||||

samples = int(arg)

|

|

||||||

elif opt == "-t":

|

|

||||||

loop_time = float(arg)

|

|

||||||

elif opt == "-v":

|

|

||||||

verbose = True

|

|

||||||

elif opt == "-C":

|

|

||||||

mqargs["tls"] = {"ca_certs": arg}

|

|

||||||

elif opt == "-I":

|

|

||||||

if ssl_ok:

|

|

||||||

mqargs["tls"] = {"cert_reqs": ssl.CERT_NONE}

|

|

||||||

else:

|

|

||||||

print("No SSL support found")

|

|

||||||

sys.exit(1)

|

|

||||||

elif opt == "-P":

|

|

||||||

password = arg

|

|

||||||

elif opt == "-S":

|

|

||||||

mqargs["tls"] = {}

|

|

||||||

elif opt == "-U":

|

|

||||||

username = arg

|

|

||||||

|

|

||||||

if username is None and password is not None:

|

|

||||||

print("Password authentication requires username to be set")

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

if print_usage or arg_error:

|

|

||||||

print("Usage: " + sys.argv[0] + " [options...]")

|

|

||||||

print("Options:")

|

|

||||||

print(" -a: Parse all valid samples")

|

|

||||||

print(" -h: Be helpful")

|

|

||||||

print(" -n <name>: Hostname of MQTT broker, default: " + host_default)

|

|

||||||

print(" -p <num>: Port number to use on MQTT broker")

|

|

||||||

print(" -r: Include ping drop run length stats")

|

|

||||||

print(" -s <num>: Number of data samples to parse, default: loop interval,")

|

|

||||||

print(" if set, else " + str(samples_default))

|

|

||||||

print(" -t <num>: Loop interval in seconds or 0 for no loop, default: " +

|

|

||||||

str(default_loop_time))

|

|

||||||

print(" -v: Be verbose")

|

|

||||||

print(" -C <filename>: Enable SSL/TLS using specified CA cert to verify broker")

|

|

||||||

print(" -I: Enable SSL/TLS but disable certificate verification (INSECURE!)")

|

|

||||||

print(" -P: Set password for username/password authentication")

|

|

||||||

print(" -S: Enable SSL/TLS using default CA cert")

|

|

||||||

print(" -U: Set username for authentication")

|

|

||||||

sys.exit(1 if arg_error else 0)

|

|

||||||

|

|

||||||

if samples is None:

|

|

||||||

samples = int(loop_time) if loop_time > 0 else samples_default

|

|

||||||

|

|

||||||

if username is not None:

|

|

||||||

mqargs["auth"] = {"username": username}

|

|

||||||

if password is not None:

|

|

||||||

mqargs["auth"]["password"] = password

|

|

||||||

|

|

||||||

logging.basicConfig(format="%(levelname)s: %(message)s")

|

|

||||||

|

|

||||||

class GlobalState:

|

|

||||||

pass

|

|

||||||

|

|

||||||

gstate = GlobalState()

|

|

||||||

gstate.dish_id = None

|

|

||||||

|

|

||||||

def conn_error(msg, *args):

|

|

||||||

# Connection errors that happen in an interval loop are not critical

|

|

||||||

# failures, but are interesting enough to print in non-verbose mode.

|

|

||||||

if loop_time > 0:

|

|

||||||

print(msg % args)

|

|

||||||

else:

|

|

||||||

logging.error(msg, *args)

|

|

||||||

|

|

||||||

def loop_body():

|

|

||||||

if gstate.dish_id is None:

|

|

||||||

try:

|

|

||||||

gstate.dish_id = starlink_grpc.get_id()

|

|

||||||

if verbose:

|

|

||||||

print("Using dish ID: " + gstate.dish_id)

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

conn_error("Failure getting dish ID: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

try:

|

|

||||||

g_stats, pd_stats, rl_stats = starlink_grpc.history_ping_stats(samples, verbose)

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

conn_error("Failure getting ping stats: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

topic_prefix = "starlink/dish_ping_stats/" + gstate.dish_id + "/"

|

|

||||||

msgs = [(topic_prefix + k, v, 0, False) for k, v in g_stats.items()]

|

|

||||||

msgs.extend([(topic_prefix + k, v, 0, False) for k, v in pd_stats.items()])

|

|

||||||

if run_lengths:

|

|

||||||

for k, v in rl_stats.items():

|

|

||||||

if k.startswith("run_"):

|

|

||||||

msgs.append((topic_prefix + k, ",".join(str(x) for x in v), 0, False))

|

|

||||||

else:

|

|

||||||

msgs.append((topic_prefix + k, v, 0, False))

|

|

||||||

|

|

||||||

try:

|

|

||||||

paho.mqtt.publish.multiple(msgs, client_id=gstate.dish_id, **mqargs)

|

|

||||||

if verbose:

|

|

||||||

print("Successfully published to MQTT broker")

|

|

||||||

except Exception as e:

|

|

||||||

conn_error("Failed publishing to MQTT broker: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

return 0

|

|

||||||

|

|

||||||

next_loop = time.monotonic()

|

|

||||||

while True:

|

|

||||||

rc = loop_body()

|

|

||||||

if loop_time > 0:

|

|

||||||

now = time.monotonic()

|

|

||||||

next_loop = max(next_loop + loop_time, now)

|

|

||||||

time.sleep(next_loop - now)

|

|

||||||

else:

|

|

||||||

break

|

|

||||||

|

|

||||||

sys.exit(rc)

|

|

||||||

|

|

||||||

|

|

||||||

if __name__ == '__main__':

|

|

||||||

main()

|

|

||||||

|

|

@ -1,151 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Equivalent script to parseJsonHistory.py, except integrating the

|

|

||||||

# gRPC calls, instead of relying on separate invocation of grpcurl.

|

|

||||||

#

|

|

||||||

# This script examines the most recent samples from the history data

|

|

||||||

# and computes several different metrics related to packet loss. By

|

|

||||||

# default, it will print the results in CSV format.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

|

|

||||||

import datetime

|

|

||||||

import getopt

|

|

||||||

import logging

|

|

||||||

import sys

|

|

||||||

import time

|

|

||||||

|

|

||||||

import starlink_grpc

|

|

||||||

|

|

||||||

|

|

||||||

def main():

|

|

||||||

arg_error = False

|

|

||||||

|

|

||||||

try:

|

|

||||||

opts, args = getopt.getopt(sys.argv[1:], "ahrs:t:vH")

|

|

||||||

except getopt.GetoptError as err:

|

|

||||||

print(str(err))

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

# Default to 1 hour worth of data samples.

|

|

||||||

samples_default = 3600

|

|

||||||

samples = None

|

|

||||||

print_usage = False

|

|

||||||

verbose = False

|

|

||||||

default_loop_time = 0

|

|

||||||

loop_time = default_loop_time

|

|

||||||

run_lengths = False

|

|

||||||

print_header = False

|

|

||||||

|

|

||||||

if not arg_error:

|

|

||||||

if len(args) > 0:

|

|

||||||

arg_error = True

|

|

||||||

else:

|

|

||||||

for opt, arg in opts:

|

|

||||||

if opt == "-a":

|

|

||||||

samples = -1

|

|

||||||

elif opt == "-h":

|

|

||||||

print_usage = True

|

|

||||||

elif opt == "-r":

|

|

||||||

run_lengths = True

|

|

||||||

elif opt == "-s":

|

|

||||||

samples = int(arg)

|

|

||||||

elif opt == "-t":

|

|

||||||

loop_time = float(arg)

|

|

||||||

elif opt == "-v":

|

|

||||||

verbose = True

|

|

||||||

elif opt == "-H":

|

|

||||||

print_header = True

|

|

||||||

|

|

||||||

if print_usage or arg_error:

|

|

||||||

print("Usage: " + sys.argv[0] + " [options...]")

|

|

||||||

print("Options:")

|

|

||||||

print(" -a: Parse all valid samples")

|

|

||||||

print(" -h: Be helpful")

|

|

||||||

print(" -r: Include ping drop run length stats")

|

|

||||||

print(" -s <num>: Number of data samples to parse, default: loop interval,")

|

|

||||||

print(" if set, else " + str(samples_default))

|

|

||||||

print(" -t <num>: Loop interval in seconds or 0 for no loop, default: " +

|

|

||||||

str(default_loop_time))

|

|

||||||

print(" -v: Be verbose")

|

|

||||||

print(" -H: print CSV header instead of parsing history data")

|

|

||||||

sys.exit(1 if arg_error else 0)

|

|

||||||

|

|

||||||

if samples is None:

|

|

||||||

samples = int(loop_time) if loop_time > 0 else samples_default

|

|

||||||

|

|

||||||

logging.basicConfig(format="%(levelname)s: %(message)s")

|

|

||||||

|

|

||||||

g_fields, pd_fields, rl_fields = starlink_grpc.history_ping_field_names()

|

|

||||||

|

|

||||||

if print_header:

|

|

||||||

header = ["datetimestamp_utc"]

|

|

||||||

header.extend(g_fields)

|

|

||||||

header.extend(pd_fields)

|

|

||||||

if run_lengths:

|

|

||||||

for field in rl_fields:

|

|

||||||

if field.startswith("run_"):

|

|

||||||

header.extend(field + "_" + str(x) for x in range(1, 61))

|

|

||||||

else:

|

|

||||||

header.append(field)

|

|

||||||

print(",".join(header))

|

|

||||||

sys.exit(0)

|

|

||||||

|

|

||||||

def loop_body():

|

|

||||||

timestamp = datetime.datetime.utcnow()

|

|

||||||

|

|

||||||

try:

|

|

||||||

g_stats, pd_stats, rl_stats = starlink_grpc.history_ping_stats(samples, verbose)

|

|

||||||

except starlink_grpc.GrpcError as e:

|

|

||||||

logging.error("Failure getting ping stats: %s", str(e))

|

|

||||||

return 1

|

|

||||||

|

|

||||||

if verbose:

|

|

||||||

print("Parsed samples: " + str(g_stats["samples"]))

|

|

||||||

print("Total ping drop: " + str(pd_stats["total_ping_drop"]))

|

|

||||||

print("Count of drop == 1: " + str(pd_stats["count_full_ping_drop"]))

|

|

||||||

print("Obstructed: " + str(pd_stats["count_obstructed"]))

|

|

||||||

print("Obstructed ping drop: " + str(pd_stats["total_obstructed_ping_drop"]))

|

|

||||||

print("Obstructed drop == 1: " + str(pd_stats["count_full_obstructed_ping_drop"]))

|

|

||||||

print("Unscheduled: " + str(pd_stats["count_unscheduled"]))

|

|

||||||

print("Unscheduled ping drop: " + str(pd_stats["total_unscheduled_ping_drop"]))

|

|

||||||

print("Unscheduled drop == 1: " + str(pd_stats["count_full_unscheduled_ping_drop"]))

|

|

||||||

if run_lengths:

|

|

||||||

print("Initial drop run fragment: " + str(rl_stats["init_run_fragment"]))

|

|

||||||

print("Final drop run fragment: " + str(rl_stats["final_run_fragment"]))

|

|

||||||

print("Per-second drop runs: " +

|

|

||||||

", ".join(str(x) for x in rl_stats["run_seconds"]))

|

|

||||||

print("Per-minute drop runs: " +

|

|

||||||

", ".join(str(x) for x in rl_stats["run_minutes"]))

|

|

||||||

if loop_time > 0:

|

|

||||||

print()

|

|

||||||

else:

|

|

||||||

csv_data = [timestamp.replace(microsecond=0).isoformat()]

|

|

||||||

csv_data.extend(str(g_stats[field]) for field in g_fields)

|

|

||||||

csv_data.extend(str(pd_stats[field]) for field in pd_fields)

|

|

||||||

if run_lengths:

|

|

||||||

for field in rl_fields:

|

|

||||||

if field.startswith("run_"):

|

|

||||||

csv_data.extend(str(substat) for substat in rl_stats[field])

|

|

||||||

else:

|

|

||||||

csv_data.append(str(rl_stats[field]))

|

|

||||||

print(",".join(csv_data))

|

|

||||||

|

|

||||||

return 0

|

|

||||||

|

|

||||||

next_loop = time.monotonic()

|

|

||||||

while True:

|

|

||||||

rc = loop_body()

|

|

||||||

if loop_time > 0:

|

|

||||||

now = time.monotonic()

|

|

||||||

next_loop = max(next_loop + loop_time, now)

|

|

||||||

time.sleep(next_loop - now)

|

|

||||||

else:

|

|

||||||

break

|

|

||||||

|

|

||||||

sys.exit(rc)

|

|

||||||

|

|

||||||

|

|

||||||

if __name__ == '__main__':

|

|

||||||

main()

|

|

||||||

147

dishStatusCsv.py

147

dishStatusCsv.py

|

|

@ -1,147 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Output Starlink user terminal status info in CSV format.

|

|

||||||

#

|

|

||||||

# This script pulls the current status and prints to stdout either

|

|

||||||

# once or in a periodic loop.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

|

|

||||||

import datetime

|

|

||||||

import getopt

|

|

||||||

import logging

|

|

||||||

import sys

|

|

||||||

import time

|

|

||||||

|

|

||||||

import grpc

|

|

||||||

|

|

||||||

import spacex.api.device.device_pb2

|

|

||||||

import spacex.api.device.device_pb2_grpc

|

|

||||||

|

|

||||||

|

|

||||||

def main():

|

|

||||||

arg_error = False

|

|

||||||

|

|

||||||

try:

|

|

||||||

opts, args = getopt.getopt(sys.argv[1:], "ht:H")

|

|

||||||

except getopt.GetoptError as err:

|

|

||||||

print(str(err))

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

print_usage = False

|

|

||||||

default_loop_time = 0

|

|

||||||

loop_time = default_loop_time

|

|

||||||

print_header = False

|

|

||||||

|

|

||||||

if not arg_error:

|

|

||||||

if len(args) > 0:

|

|

||||||

arg_error = True

|

|

||||||

else:

|

|

||||||

for opt, arg in opts:

|

|

||||||

if opt == "-h":

|

|

||||||

print_usage = True

|

|

||||||

elif opt == "-t":

|

|

||||||

loop_time = float(arg)

|

|

||||||

elif opt == "-H":

|

|

||||||

print_header = True

|

|

||||||

|

|

||||||

if print_usage or arg_error:

|

|

||||||

print("Usage: " + sys.argv[0] + " [options...]")

|

|

||||||

print("Options:")

|

|

||||||

print(" -h: Be helpful")

|

|

||||||

print(" -t <num>: Loop interval in seconds or 0 for no loop, default: " +

|

|

||||||

str(default_loop_time))

|

|

||||||

print(" -H: print CSV header instead of parsing file")

|

|

||||||

sys.exit(1 if arg_error else 0)

|

|

||||||

|

|

||||||

logging.basicConfig(format="%(levelname)s: %(message)s")

|

|

||||||

|

|

||||||

if print_header:

|

|

||||||

header = [

|

|

||||||

"datetimestamp_utc",

|

|

||||||

"hardware_version",

|

|

||||||

"software_version",

|

|

||||||

"state",

|

|

||||||

"uptime",

|

|

||||||

"snr",

|

|

||||||

"seconds_to_first_nonempty_slot",

|

|

||||||

"pop_ping_drop_rate",

|

|

||||||

"downlink_throughput_bps",

|

|

||||||

"uplink_throughput_bps",

|

|

||||||

"pop_ping_latency_ms",

|

|

||||||

"alerts",

|

|

||||||

"fraction_obstructed",

|

|

||||||

"currently_obstructed",

|

|

||||||

"seconds_obstructed",

|

|

||||||

]

|

|

||||||

header.extend("wedges_fraction_obstructed_" + str(x) for x in range(12))

|

|

||||||

print(",".join(header))

|

|

||||||

sys.exit(0)

|

|

||||||

|

|

||||||

def loop_body():

|

|

||||||

timestamp = datetime.datetime.utcnow()

|

|

||||||

|

|

||||||

try:

|

|

||||||

with grpc.insecure_channel("192.168.100.1:9200") as channel:

|

|

||||||

stub = spacex.api.device.device_pb2_grpc.DeviceStub(channel)

|

|

||||||

response = stub.Handle(spacex.api.device.device_pb2.Request(get_status={}))

|

|

||||||

|

|

||||||

status = response.dish_get_status

|

|

||||||

|

|

||||||

# More alerts may be added in future, so rather than list them individually,

|

|

||||||

# build a bit field based on field numbers of the DishAlerts message.

|

|

||||||

alert_bits = 0

|

|

||||||

for alert in status.alerts.ListFields():

|

|

||||||

alert_bits |= (1 if alert[1] else 0) << (alert[0].number - 1)

|

|

||||||

|

|

||||||

csv_data = [

|

|

||||||

timestamp.replace(microsecond=0).isoformat(),

|

|

||||||

status.device_info.id,

|

|

||||||

status.device_info.hardware_version,

|

|

||||||

status.device_info.software_version,

|

|

||||||

spacex.api.device.dish_pb2.DishState.Name(status.state),

|

|

||||||

]

|

|

||||||

csv_data.extend(

|

|

||||||

str(x) for x in [

|

|

||||||

status.device_state.uptime_s,

|

|

||||||

status.snr,

|

|

||||||

status.seconds_to_first_nonempty_slot,

|

|

||||||

status.pop_ping_drop_rate,

|

|

||||||

status.downlink_throughput_bps,

|

|

||||||

status.uplink_throughput_bps,

|

|

||||||

status.pop_ping_latency_ms,

|

|

||||||

alert_bits,

|

|

||||||

status.obstruction_stats.fraction_obstructed,

|

|

||||||

status.obstruction_stats.currently_obstructed,

|

|

||||||

status.obstruction_stats.last_24h_obstructed_s,

|

|

||||||

])

|

|

||||||

csv_data.extend(str(x) for x in status.obstruction_stats.wedge_abs_fraction_obstructed)

|

|

||||||

rc = 0

|

|

||||||

except grpc.RpcError:

|

|

||||||

if loop_time <= 0:

|

|

||||||

logging.error("Failed getting status info")

|

|

||||||

csv_data = [

|

|

||||||

timestamp.replace(microsecond=0).isoformat(), "", "", "", "DISH_UNREACHABLE"

|

|

||||||

]

|

|

||||||

rc = 1

|

|

||||||

|

|

||||||

print(",".join(csv_data))

|

|

||||||

|

|

||||||

return rc

|

|

||||||

|

|

||||||

next_loop = time.monotonic()

|

|

||||||

while True:

|

|

||||||

rc = loop_body()

|

|

||||||

if loop_time > 0:

|

|

||||||

now = time.monotonic()

|

|

||||||

next_loop = max(next_loop + loop_time, now)

|

|

||||||

time.sleep(next_loop - now)

|

|

||||||

else:

|

|

||||||

break

|

|

||||||

|

|

||||||

sys.exit(rc)

|

|

||||||

|

|

||||||

|

|

||||||

if __name__ == '__main__':

|

|

||||||

main()

|

|

||||||

|

|

@ -1,283 +0,0 @@

|

||||||

#!/usr/bin/python3

|

|

||||||

######################################################################

|

|

||||||

#

|

|

||||||

# Write Starlink user terminal status info to an InfluxDB database.

|

|

||||||

#

|

|

||||||

# This script will poll current status and write it to the specified

|

|

||||||

# InfluxDB database either once or in a periodic loop.

|

|

||||||

#

|

|

||||||

######################################################################

|

|

||||||

|

|

||||||

import getopt

|

|

||||||

import logging

|

|

||||||

import os

|

|

||||||

import signal

|

|

||||||

import sys

|

|

||||||

import time

|

|

||||||

import warnings

|

|

||||||

|

|

||||||

import grpc

|

|

||||||

from influxdb import InfluxDBClient

|

|

||||||

from influxdb import SeriesHelper

|

|

||||||

|

|

||||||

import spacex.api.device.device_pb2

|

|

||||||

import spacex.api.device.device_pb2_grpc

|

|

||||||

|

|

||||||

|

|

||||||

class Terminated(Exception):

|

|

||||||

pass

|

|

||||||

|

|

||||||

|

|

||||||

def handle_sigterm(signum, frame):

|

|

||||||

# Turn SIGTERM into an exception so main loop can clean up

|

|

||||||

raise Terminated()

|

|

||||||

|

|

||||||

|

|

||||||

def main():

|

|

||||||

arg_error = False

|

|

||||||

|

|

||||||

try:

|

|

||||||

opts, args = getopt.getopt(sys.argv[1:], "hn:p:t:vC:D:IP:R:SU:")

|

|

||||||

except getopt.GetoptError as err:

|

|

||||||

print(str(err))

|

|

||||||

arg_error = True

|

|

||||||

|

|

||||||

print_usage = False

|

|

||||||

verbose = False

|

|

||||||

default_loop_time = 0

|

|

||||||